Why?

I messed about with a few different stacks for running a web server on a Pi, and most were complicated. They were complicated to configure, and they were large, complex animals providing a lot of functionality (and overhead) that was of no real use to me. I wanted something that was:

- simple to install / set up

- suitable for use as an 'inside' home web service (i.e. not exposed to the nasty world outside)

- able to run reasonably fast

- really simple to use (from Python in particular)

Some very simple ways to do this use CGI, but I soon found that method awkward to use, and it looked as though it carried significant performance overheads. I switched to `http.server.HTTPServer`, which I like and which provides a 'shape' of framework I am comfortable with.
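For anyone who hasn't used `http.server` before, the following minimal sketch shows the basic shape: a handler class with a `do_GET` method, plugged into an `HTTPServer`. It is not the author's server; the class name `HelloHandler`, the helper `make_server` and the default port 8000 are illustrative assumptions.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a tiny fixed HTML page."""

    def do_GET(self):
        body = b"<html><body><p>Hello from the Pi</p></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=8000):
    # Bind to all interfaces ("") so other machines on the LAN can connect.
    # Port 8000 is an arbitrary choice for this sketch.
    return HTTPServer(("", port), HelloHandler)
```

To try it, call `make_server().serve_forever()` on the Pi and browse to port 8000 from another machine on the LAN. Plain `HTTPServer` handles one request at a time, which keeps things simple; the standard library also provides `ThreadingHTTPServer` (Python 3.7+) as a drop-in alternative.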

What?

On a LAN-connected Raspberry Pi 3, this approach will happily serve up to 200 requests per second, as long as the overall network bandwidth doesn't get too high.

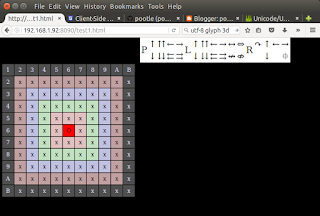

The test I used serves up images on demand to create a virtual movie, driven from JavaScript in the web page. The individual images were just under 20k bytes each on average.
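A quick back-of-the-envelope calculation shows why network bandwidth becomes the limiting factor. This sketch rounds "just under 20k bytes" up to 20,000 bytes and ignores HTTP header and TCP/IP overhead, so real traffic will be somewhat higher; the function name `estimated_bandwidth` is hypothetical.

```python
def estimated_bandwidth(requests_per_second, avg_bytes_per_response):
    """Return (bytes per second, megabits per second) for a steady request rate.

    Ignores HTTP headers and TCP/IP overhead, so the real figure is higher.
    """
    bytes_per_second = requests_per_second * avg_bytes_per_response
    megabits_per_second = bytes_per_second * 8 / 1_000_000
    return bytes_per_second, megabits_per_second


# 200 requests/s of roughly 20,000-byte JPEG images.
bps, mbps = estimated_bandwidth(200, 20_000)
print(f"{bps / 1_000_000:.1f} MB/s, about {mbps:.0f} Mbit/s")  # 4.0 MB/s, about 32 Mbit/s
```

Around 32 Mbit/s of payload is already a noticeable share of a 100 Mbit/s link, such as the Ethernet port on the original Pi 3 Model B, before any other traffic is counted.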

I wanted to minimise the load on the Raspberry Pi and keep the user interface simple and responsive. To do this, the Python web server is very simple: after serving up the initial page, it just responds to requests from the client.

The web page implements the user interface controls in JavaScript and fires off requests to the web server.
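Because the server only reacts to client requests, its core can be little more than a mapping from request path to response. The following sketch shows one way to do that dispatch with the standard library's `urllib.parse`; the `/frame?n=...` URL scheme and the `route` function are hypothetical, not taken from the original code.

```python
from urllib.parse import parse_qs, urlsplit


def route(path, frame_count):
    """Map a request path (e.g. a handler's self.path) to an action tuple.

    Returns ("page", None) for the initial page, ("frame", n) for a valid
    frame request, or ("error", None) for anything else.
    The "/frame?n=..." URL scheme is hypothetical, chosen for illustration.
    """
    parts = urlsplit(path)
    if parts.path in ("/", "/index.html"):
        return ("page", None)
    if parts.path == "/frame":
        values = parse_qs(parts.query).get("n", [])
        try:
            n = int(values[0])
        except (IndexError, ValueError):
            # Missing or non-numeric frame number.
            return ("error", None)
        if 0 <= n < frame_count:
            return ("frame", n)
    return ("error", None)
```

Inside a `do_GET` method you would call something like `route(self.path, len(index))` and then send either the HTML page, the requested JPEG, or an error status. Keeping the routing as a plain function makes it easy to test without starting a server.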

The web server runs happily on a Raspberry Pi (including the Zero) and on my Ubuntu laptop. It appears to work well with Firefox, Chrome and Internet Explorer on laptops / PCs. It does not work in Edge, but as I have little interest in Windoze, I'm not really concerned about IE / Edge support.

It will run on reasonably fast phones / tablets, but not at high framerates; my old Galaxy S2 isn't much use, but a Hudl2 works well as long as the framerate is kept low.

This is just a proof of concept, so presentation and error handling are minimal / non-existent, and functionality is limited.

How?

There are 2 files:

- a Python 3 file (the server) of around 100 lines

- an HTML file (the client) of around 200 lines

The simple web server builds an index of all the JPEG files in the given folder and serves a web page whose JavaScript functions let you move around within the images and play them back at various speeds by controlling the framerate and stride.
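The two ideas in that paragraph, an index of image files and playback by stride, can be sketched in a few lines of Python. This is an illustration under assumptions, not the author's code: it assumes frames are named so that sorting by file name gives playback order (e.g. `frame0001.jpg`, `frame0002.jpg`), and that playback wraps around at either end. In the original, stride handling lives in the JavaScript client; `build_index` and `next_frame` are hypothetical names.

```python
from pathlib import Path


def build_index(folder):
    """Return a sorted list of JPEG file paths in folder (not recursive).

    Sorting by file name assumes the names sort into playback order.
    """
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in (".jpg", ".jpeg")
    )


def next_frame(current, stride, frame_count):
    """Step `stride` frames (negative plays backwards), wrapping at both ends."""
    # Python's % always returns a non-negative result for a positive
    # frame_count, so stepping back from frame 0 lands on the last frame.
    return (current + stride) % frame_count
```

Framerate is then just the delay between successive requests: at 10 frames per second the client would ask for the next frame roughly every 100 ms, while a stride of 3 skips two frames per request to play faster without raising the request rate.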